Short Blurb

A free-to-play, mobile, multiplayer role-playing game. It is one of my favorite projects and one of my proudest, because it is a fully fledged production backend system that involved UI, backend, DevOps, cloud, and a hybrid monolithic architecture. We could not yet justify the cost of a microservices architecture, but there is a break point where we would pivot over if it made sense.

Architected, developed and deployed a scalable backend platform architecture to serve AI-generated themed art and storylines (i.e. game assets) using Python and FastAPI, leveraging AWS services such as EC2, Lambda (Python), S3, CloudFront, Simple Email Service, Simple Notification Service, API Gateway (HTTP/REST APIs) and DynamoDB.

Optimized cloud infrastructure costs (100% improvement) and latency (30% improvement) for services hosted on AWS.

Developed a custom Stable Diffusion model into an API service for demos.

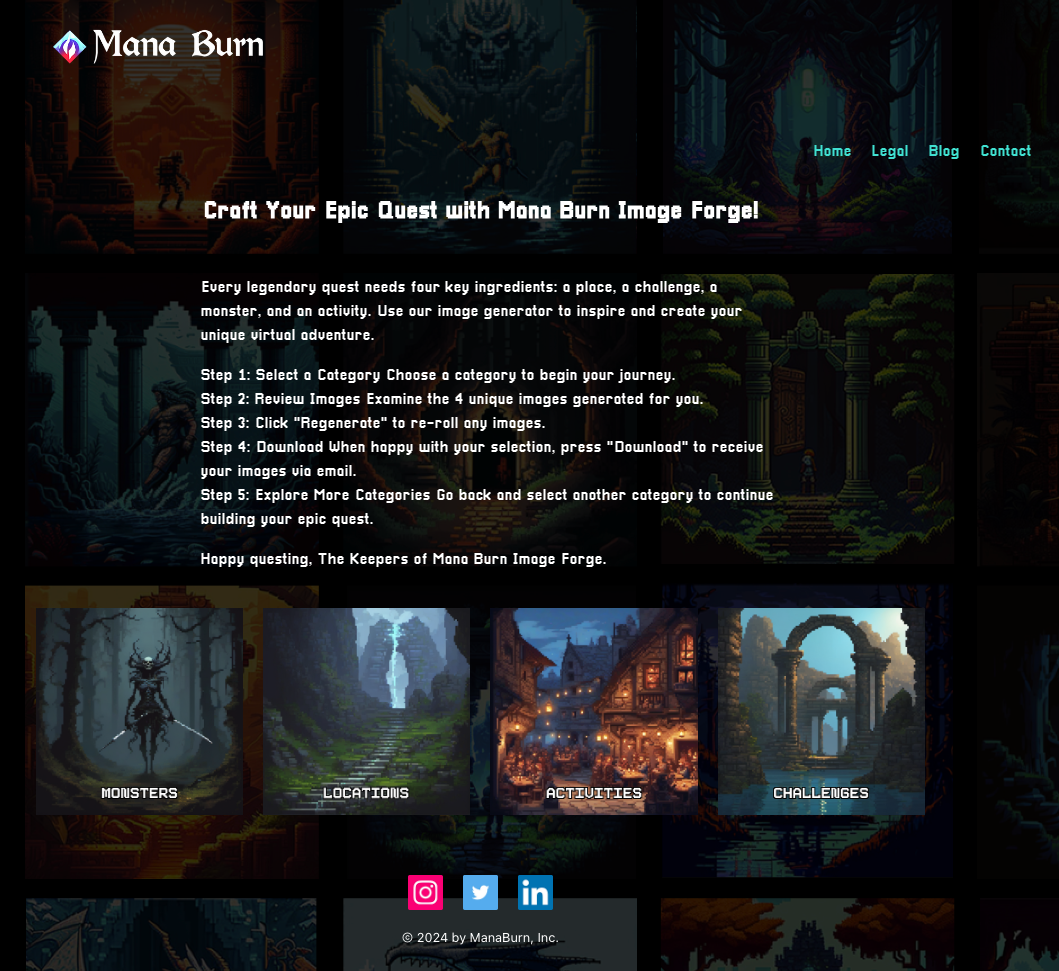

Launched a new marketing tool within the Google Accelerator program that successfully attracted 15% more users during the pilot phase; the implementation used TypeScript, React, and Vue to ensure a dynamic and responsive user experience. Sample of tool: https://app.manaburn.gg/

Tech talk:

The team already had image generation when I came onboard, and I fell in love with the art generation. I could see a utility for it in tabletop games and such; the key was that everything fit within a theme. At that time, AI-generated art was all over the place.

I proposed a data pipeline to build out a platform to sell generated art, storylines and such to speed up developing games.

I started the MVP on Python and something obscure, then found FastAPI and decided it was a better way to go. I deployed it via Docker on AWS EC2 for GDC so the founder could peddle it at his meetings there. We did not have a frontend, so I trained the executive team to demo it via Postman Flows. For GDC we used an elastic IP and an upgraded EC2 instance (we paid for a g4dn.2xlarge for fast generation of the images and storyline), and stored images in AWS S3.

More about the code: a habit from banking is that data is so important, and ensuring that data is not corrupted or manipulated in transit is a key concern. There was a possibility of doing web3 crypto assets and sales, and we were not sure how valuable this AI-generated art was to recreate (I was able to recreate it via Runpod in this post).

Back to data: I used pydantic and a model/data schema to ensure that we would only use data from the database, and stored data in the event of failure (or at least recoverable data for mitigation) if needed. There was less need for this in our case, since everything stayed within the AWS ecosystem. I was not going to set up our own infrastructure and support those costs until we absolutely needed to.
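A minimal sketch of that kind of schema gate, assuming a hypothetical `GameAsset` record (the field names `asset_id`, `theme`, `image_key`, `storyline` and `tags` are illustrative, not the production model). The idea is simply that a record read from storage is either fully valid or rejected before anything downstream touches it.

```python
from typing import List, Optional

from pydantic import BaseModel, Field, ValidationError


class GameAsset(BaseModel):
    """Hypothetical record for one generated asset; field names are illustrative."""

    asset_id: str = Field(min_length=1)
    theme: str = Field(min_length=1)
    image_key: str = Field(min_length=1)  # assumed to be a storage object key
    storyline: Optional[str] = None
    tags: List[str] = Field(default_factory=list)


def parse_asset(raw: dict) -> Optional[GameAsset]:
    """Return a validated asset, or None if the raw record is malformed."""
    try:
        return GameAsset(**raw)
    except ValidationError:
        # A real service would log the error and keep the raw record for recovery.
        return None
```

Calling `parse_asset({"asset_id": "a1", "theme": "forest", "image_key": "art/a1.png"})` returns a typed object, while a record with a missing or empty required field returns `None` instead of flowing further into the pipeline.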

After GDC, I migrated our implementation over to AWS Lambda. As a startup, we get 1 million free Lambda calls per month, which is sufficient for development and demos; on top of that, we kept our eyes out and applied for whatever accelerator free credits we could get. The migration also solved my concerns with ramp-up, routing and security in the MVP implementation of the architecture. With this migration, I added integrations to S3 (for storage and Amplify deploys), CloudFront (for caching), SES (for email), SNS (for notifications), API Gateway (for routing), DynamoDB (for storage; not the fastest, but the cheapest) and CloudWatch (for monitoring).
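To make the Lambda plus API Gateway shape concrete, here is a rough sketch of a handler for an API Gateway HTTP API event (payload format 2.0). The routes `/health` and `/assets`, the `theme` field and the `queued` response are all assumptions for illustration; the actual service used FastAPI and its own routes.

```python
import base64
import json


def _response(status: int, payload: dict) -> dict:
    """Build a response in the shape a Lambda proxy integration returns."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def handler(event: dict, context: object) -> dict:
    """Route an API Gateway HTTP API (payload format 2.0) event."""
    method = event.get("requestContext", {}).get("http", {}).get("method", "")
    path = event.get("rawPath", "")

    if method == "GET" and path == "/health":
        return _response(200, {"status": "ok"})

    if method == "POST" and path == "/assets":
        body = event.get("body") or "{}"
        if event.get("isBase64Encoded"):
            body = base64.b64decode(body).decode("utf-8")
        try:
            request = json.loads(body)
        except json.JSONDecodeError:
            return _response(400, {"error": "body must be valid JSON"})
        if not isinstance(request, dict) or not request.get("theme"):
            return _response(400, {"error": "theme is required"})
        # Placeholder: a real handler would trigger or enqueue generation here.
        return _response(202, {"theme": request["theme"], "status": "queued"})

    return _response(404, {"error": "not found"})
```

Because the handler is a plain function over a dict, it can be exercised locally with hand-built events before anything is deployed.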

I was not able to prevent downloads by right-clicking on mobile; it would have required more time than I had, given the constant DevOps I was doing to support the team and development. It is preventable on the desktop but not on mobile. We explored putting a watermark on our images as they were being sent out, and decided to add it as part of our image generation flow instead of the delivery (to requester) flow.

Our costs ran about $10-30/month. I optimized further by training whoever was willing on prompt engineering to reduce our token usage, and added a caching strategy to further reduce our ongoing OpenAI API costs. I explored fine-tuning, RAG, and query chaining to try to eke out a more compelling storyline, and read university research papers on what researchers have determined is required to train a storytelling model. The studies were extensive and mostly catered towards D&D (low-hanging fruit, as the downside risks are low and the rules are well-defined). It reminded me of why the best cutting-edge tech always stems from games.

The components required for a good storytelling LLM are: Literature Review, Plot Development, Character Development, Storytelling, Writing Style, and Story Planning.
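The caching idea can be sketched roughly as below. This is an in-memory illustration under my own assumptions, not the deployed strategy: `PromptCache` and the injected `generate` callable are hypothetical names, and a real version would sit in front of the actual API client and persist entries somewhere durable.

```python
import hashlib
import json
from typing import Callable, Dict


class PromptCache:
    """Cache completions by (model, prompt) so repeated prompts cost nothing."""

    def __init__(self, generate: Callable[[str, str], str]) -> None:
        # `generate` stands in for the paid API call: (model, prompt) -> text.
        self._generate = generate
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # Collapse whitespace so trivially different prompts share one entry.
        normalized = " ".join(prompt.split())
        payload = json.dumps({"model": model, "prompt": normalized}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def complete(self, model: str, prompt: str) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = self._generate(model, prompt)
        self._store[key] = result
        return result
```

The trade-off is that cached prompts always return the same storyline, so this suits demo and development traffic better than anything where variety per request matters.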

The boundaries set within our team were that there is a technical art aspect: the art team continued to refine and develop the magical mix of LoRAs that makes our art look good. I lifted that portion into a backend service. I worked on the backend itself, along with DevOps and monitoring, and also worked on the storytelling generative AI portion.

Most of the analysis (a detailed analysis of technical decisions, justifications for the architecture, and possible foreseeable directions of growth and concerns) is encapsulated in documents (lots of documents) that I wrote during my tenure there. This wasn’t just about solving short-term problems; it was about creating a scalable, maintainable foundation for the company’s future. My approach to technical work is always long-term. Even if I step away from a project, I ensure that my contributions are well-documented and transparent, with no gate-keeping for any technical talent we may choose to onboard. We ended up using this as part of our package for VCs (document). Ariel is credited for the image generator, and our CTO is credited for draft revisions and for gearing the document towards what the VCs may need to see.

We got accepted to the Google Accelerator, where the ask was a frontend to showcase and leverage the backend. It was my first foray into the frontend in a while, so the programming is a bit clunky: Manaburn Meme Generator

The meme generator is deployed on AWS Amplify via a custom subdomain of a domain we already owned. Initially, I deployed it by dropping a zip file in AWS Amplify, but I found that tedious once the codebase was a bit more stable, so I integrated GitHub Actions to autodeploy. In subsequent deploys, I used the AWS CLI and a CloudFormation stack.

I also started implementing a config server within AWS as we needed to store and share several API keys between our meme generator, mobile gaming app, and backend.

I am recreating the APIs in a random tabletop game I picked up, Sweaters for Hedgehog, in this post here, and got sidetracked by image generation and by creating a good process and environment to journal my dev journey. I created a Discord bot for it but decided against deployment because, when simplified further, I realized it could be resolved by a bunch of if/else and switch statements instead of wasting resources deploying and hosting it as an API. Ultimately, it makes no sense to create for the sake of creating. It is in TBD status.

Reflections

In hindsight, I would have just used Netlify and hooked it up to GitHub, which I am doing present day for my personal projects. It’s free.

I would also have used diagrams as code for my architecture diagrams and checked them in with the codebase as part of the documentation.

I did not like the logging in CloudWatch at all. It was not the level of visibility I was looking for. Perhaps due to cost constraints, I was not able to customize it much, but I was paying attention to user usage and set up alerts for when it blew up. I did not have the time to set up a full observability stack, but I would have used an equivalent of Splunk to parse logs and set up alerts and dashboards. Speaking of which, I should implement a Grafana dashboard of my own for my personal projects.

I ended up working with our CTO on what he needed to sell this platform within the timelines I could manage, and on a process for Ariel to iteratively generate the images. I did a pass and abstracted out a lot of the config so other people could iteratively prompt engineer whatever they needed for a demo. The codebase could be iteratively improved on (the gen AI libs iterate and release new versions every 4-6 weeks) and shortened. Admittedly, I delved into a random number generator to generate the random seed for the image generation. I was expecting no faster than 1 ms per image; we did not get faster than 3 seconds per image set (each image has a depth field and 3 other layers to give it dimension) given hardware availability, but our pipeline needs did not require that optimization. I also looked into batch generation, which was not built into the libs, but we did not need it then either.
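For the seed piece, a small sketch of the general approach: draw one unpredictable base seed, then derive repeatable per-image seeds from it so a good image set can be regenerated later. The 32-bit `MAX_SEED` range and the function names are assumptions of mine; the range the generation library actually accepts should be checked.

```python
import random
import secrets
from typing import List

# Assumed seed range; confirm what the image generation library accepts.
MAX_SEED = 2**32 - 1


def new_seed() -> int:
    """Draw an unpredictable base seed from the OS entropy source."""
    return secrets.randbelow(MAX_SEED + 1)


def seeds_for_batch(base_seed: int, count: int) -> List[int]:
    """Derive `count` per-image seeds deterministically from one base seed."""
    rng = random.Random(base_seed)
    return [rng.randrange(MAX_SEED + 1) for _ in range(count)]
```

Storing only the base seed alongside the prompt config is then enough to reproduce every seed in the set.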

I did have to optimize and minimize the libs we were using in order to include them as a layer for AWS Lambda, since Lambda did not come with those libs and they were too large as-is for Lambda layers. It was also a balance between the number of Lambda calls and the runtime per Lambda function; I had to find the sweet spot in terms of cost.
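The calls-versus-runtime trade-off can be reasoned about with a back-of-the-envelope estimate like the one below. The default prices and free-tier figures are assumptions based on commonly published x86 rates and may be out of date or differ by region, so treat them as placeholders and check current AWS pricing.

```python
def monthly_lambda_cost(
    invocations: int,
    avg_duration_s: float,
    memory_gb: float,
    price_per_million_requests: float = 0.20,  # assumed USD rate
    price_per_gb_second: float = 0.0000166667,  # assumed USD rate
    free_requests: int = 1_000_000,  # assumed monthly free tier
    free_gb_seconds: float = 400_000.0,  # assumed monthly free tier
) -> float:
    """Estimate monthly Lambda cost in USD from request count and compute time."""
    billable_requests = max(0, invocations - free_requests)
    gb_seconds = invocations * avg_duration_s * memory_gb
    billable_gb_seconds = max(0.0, gb_seconds - free_gb_seconds)
    return (
        billable_requests / 1_000_000 * price_per_million_requests
        + billable_gb_seconds * price_per_gb_second
    )
```

With these assumed rates, 500,000 one-second calls at 0.5 GB stay inside the free tier, while splitting the same work into more, shorter calls shifts cost from the compute term to the request term. That is the sweet spot being searched for.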

Conclusion

I really loved working on Manaburn; it was a great and exceedingly creative team. It was refreshing to work with creatives after being at a bank for 16 years. I loved the energy and passion they had for the game, and I loved being able to create, architect and just run with things. I did end up having to lay down some processes so we did not step on each other’s work (even with established domain boundaries, there were some intersections) and implemented some utility/dev tools so that the art and creativity could be iteratively implemented without my involvement. I have learnt to appreciate the processes and standards set in place at the bank: we never see the problems we do not experience or sidestep.

I learnt a fascinating amount by reading about how to train an LLM for storytelling. It is not much different than training an individual or a child - it sticks when it is layered on, corrected, and refined. The data has to be clean, and so much of the data we iterate on as individuals is random, colored, non-contiguous, and not well-defined. The invisible, unappreciated work is in the clarity and organization of all this data.

I did end up loving the research and backend work, it reminded me of how deep my expertise ran that I took for granted as “basic”. In hindsight, fast prototyping is more important than building out a system that will stand (documentation included) in the event that they want a different developer in place, or if I had to transition this work.

I have done thorough integration testing when implementing backends, but not a lot for Manaburn, as the issues get flushed out as it is used iteratively. It was not close to what I released at Wells Fargo, where I would do passes for linting, coding standards, ADA, compliance, negative testing, black/white box, user/data flows, regression testing and load testing, and coordinate all of this with other projects whose moving parts were identified and negotiated to sync. Then I would prep the dashboard to monitor our production deployment for 30-day support (we have a warranty!) before we transitioned it over to production support.

I work well in cycles: intense development for a few weeks, then a break, then cycle through again. It is my de facto mode when I am inspired, and I take the down days to document. If the environment were more like a marathon (infinitely long), I would need a different strategy with a firm discipline regimen: get a run in every morning, meal prep for the week before starting my coding marathon, and go to bed at a set time daily. It all works to generate more energy for me to focus and burn; I find that my runs bring mental clarity as well.

Ultimately, I was offered a position as a cofounder in Manaburn’s parent company, Plaiful. I realized that gaming was not a passion of mine; it is a perfect playground to experiment and learn, but I cannot see doing it for the next 5-10 years. It did not feel right to take a founder’s equity upon that realization, so I stepped away. We could not reach mutual ground on the non-compete, but I would abide by it anyway. I do own the entire architecture to date and have permission to showcase it as a project of mine, but I would not necessarily shout it from the rooftops, out of regard for the wonderful team at Manaburn. I have been, and will be, turning down opportunities in games, out of regard for myself and Manaburn.