Everyone is making it look too easy. Banners flash RAG training ads, “RAG train here with us, it’s so easy, drop your data here, drop your model here,” and, like a vending machine, BOOM! Here is your trained model.

Having worked with large amounts of data, I can already tell that finding, filtering, and screening the data properly will be the most time-consuming part; that alone takes days. The training itself looks fairly easy, provided you can decide on the most cost-efficient GPU (who? where?) to train on. The LoRAs I like to use are basically a summation of the types of images I like across the board. It would be awesome if we could tag digital assets with ownership, so artists could get a piece of the pie instead of eternally being infringed upon. I suppose whoever solves that is sitting on a trillion-dollar asset.
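Much of that screening time goes into the same repetitive checks. Below is a minimal sketch of one screening pass, assuming each collected image has already been loaded into a hypothetical `ImageRecord` holding its raw bytes, dimensions, and caption. It drops byte-identical duplicates (via a SHA-256 content hash), images whose shorter side falls below an assumed 512 px threshold, and images with no caption. Near-duplicates (resized or re-encoded copies) and content/licensing screening would need more than this.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class ImageRecord:
    # Hypothetical record: raw file bytes plus metadata gathered during collection.
    path: str
    data: bytes
    width: int
    height: int
    caption: str


def screen(records, min_side=512):
    """Keep images that are unique, large enough, and captioned (min_side is an assumed threshold)."""
    seen = set()  # content hashes of images already kept
    kept = []
    for rec in records:
        digest = hashlib.sha256(rec.data).hexdigest()
        if digest in seen:
            continue  # byte-identical copy of an image we already kept
        if min(rec.width, rec.height) < min_side:
            continue  # too small to be useful for training
        if not rec.caption.strip():
            continue  # no caption/tag text to train against
        seen.add(digest)
        kept.append(rec)
    return kept


records = [
    ImageRecord("a.png", b"aaa", 1024, 1024, "a red fox in snow"),
    ImageRecord("a_copy.png", b"aaa", 1024, 1024, "a red fox in snow"),
    ImageRecord("tiny.png", b"bbb", 256, 256, "thumbnail"),
    ImageRecord("nocap.png", b"ccc", 768, 768, ""),
]
print([r.path for r in screen(records)])  # ['a.png']
```

Exact hashing is cheap and catches the most common mess (the same file scraped twice); anything fuzzier trades speed for recall.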

I guess the next question is: what kind of hardware setup do I need to run agentic AIs? I have a list of models I need to deploy to get what I want, specifically encoder/decoder models for various text and image tasks in particular domains.
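A first-pass way to size the hardware is to add up how much memory each model's weights need: parameter count times bytes per parameter (for example, 7 billion parameters in fp16 at 2 bytes each is about 14 GB just for the weights). Below is a minimal sketch of that arithmetic. The model names and sizes are hypothetical placeholders, and the 1.2 overhead factor for activations, KV cache, and runtime buffers is an assumption; real usage depends heavily on context length, batch size, and the serving framework.

```python
# Approximate bytes per parameter for common weight formats (weights only).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fleet_memory_gb(models: dict, overhead: float = 1.2) -> float:
    """Sum weight memory for several models loaded at once, padded by an
    assumed overhead factor for activations, KV cache, and framework buffers."""
    total = sum(weight_memory_gb(n, dtype) for n, dtype in models.values())
    return total * overhead


# Hypothetical model list: name -> (parameter count, precision).
models = {
    "text-llm": (7e9, "fp16"),
    "embedding-encoder": (335e6, "fp16"),
    "image-decoder": (2.6e9, "fp16"),
}

for name, (n, dtype) in models.items():
    print(f"{name}: {weight_memory_gb(n, dtype):.1f} GB")
print(f"all loaded at once: {fleet_memory_gb(models):.1f} GB")
```

Swapping a model to `int8` or `int4` halves or quarters its weight footprint, which is often the deciding factor between one consumer GPU and several.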

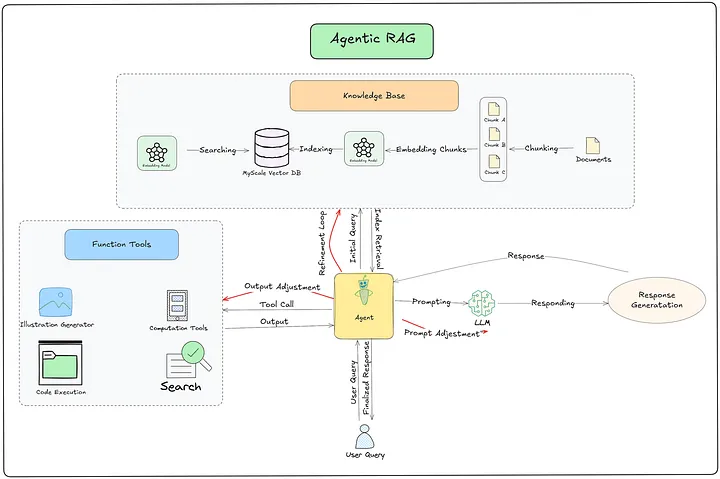

Pipeline for Core Stages

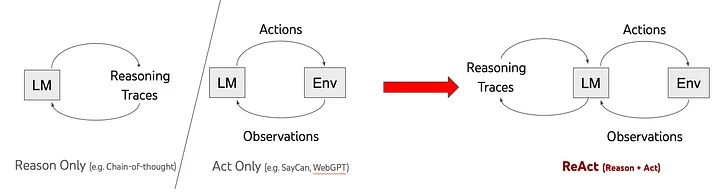

copied from https://medium.com/@myscale/a-beginners-guide-on-agentic-rag-f1254d836063
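The figure's exact stages aren't reproduced here, but the core idea that separates agentic RAG from a single retrieve-then-generate pass is that an agent inspects what it retrieved and decides what to do next. Below is a minimal sketch of that retrieve, grade, and rewrite loop under loud assumptions: the three-document `CORPUS` is a toy, word-overlap scoring stands in for embedding search, the `min_score` threshold is arbitrary, and both the query rewrite and the final answer are stubs where a real system would call an LLM. All names are hypothetical.

```python
from typing import Callable, List, Tuple

# Hypothetical toy corpus standing in for a real vector store.
CORPUS = [
    "LoRA adapters fine-tune a frozen base model with low-rank weight updates.",
    "Retrieval-augmented generation pulls documents into the prompt at query time.",
    "GPU memory limits how many models can be served at once.",
]


def tokenize(text: str) -> set:
    """Lowercase words with surrounding punctuation stripped."""
    return {w.strip(".,?!:;").lower() for w in text.split()}


def retrieve(query: str, k: int = 2) -> List[Tuple[int, str]]:
    """Return the top-k (score, doc) pairs by word overlap, a stand-in for vector search."""
    q = tokenize(query)
    scored = [(len(q & tokenize(doc)), doc) for doc in CORPUS]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def agentic_rag(query: str, rewrite: Callable[[str], str],
                min_score: int = 2, max_steps: int = 3) -> str:
    """Retrieve, grade the results, and reformulate the query until the
    context is good enough or the step budget runs out."""
    for _ in range(max_steps):
        hits = retrieve(query)
        best_score = hits[0][0] if hits else 0
        if best_score >= min_score:  # the "agent" judges the context sufficient
            context = " ".join(doc for score, doc in hits if score > 0)
            # A real pipeline would send query + context to an LLM here.
            return f"ANSWER({query!r}) <- {context}"
        query = rewrite(query)  # e.g. an LLM-proposed reformulation
    return "NO_ANSWER: retrieval never found enough support"


# Hypothetical rewrite step: append domain terms the corpus actually uses.
print(agentic_rag("cheap fine-tuning", rewrite=lambda q: q + " lora adapters"))
```

The first query matches nothing, so the loop rewrites it and retries; the step budget keeps a bad rewrite from looping forever.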


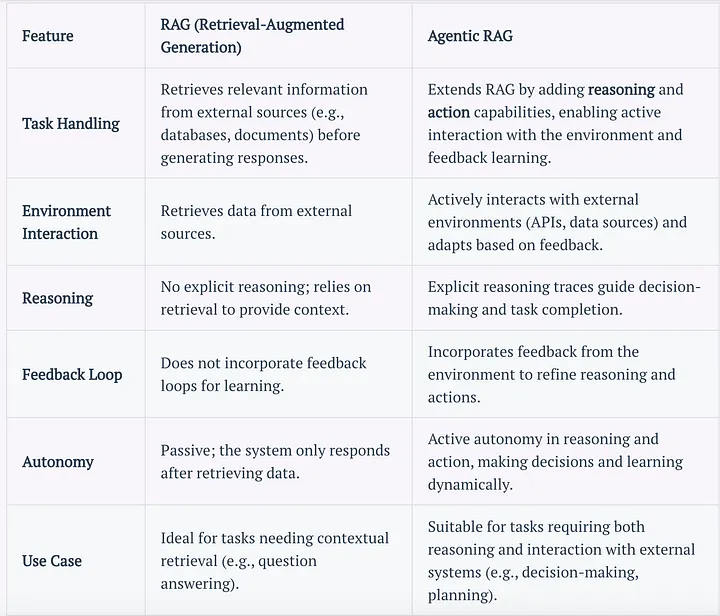

Comparison table between the two:
